Abstract

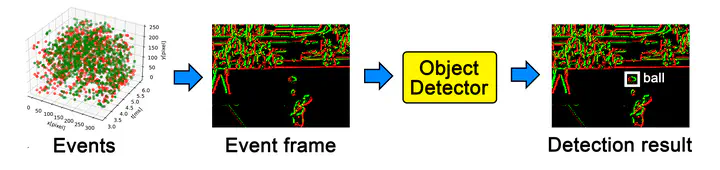

Ball detection in sports, particularly in fast-paced games like volleyball, where the ball is almost always moving at high speed, presents a significant challenge for game analysis and automated sports broadcasting. Conventional camera-based ball detection suffers from problems such as motion blur when the ball moves quickly. To address these challenges, we propose a deep learning-based method for detecting balls using event cameras. Event cameras, also known as dynamic vision sensors, operate differently from traditional cameras. Instead of capturing frames at fixed intervals, they record individual pixel-level luminance changes, referred to as events. This allows event cameras to provide precise temporal information with low latency. Our proposed method transforms sparse events into an image format, enabling the use of current deep-learning architectures for object detection. Because only a limited amount of event data is available for training an object detector, we generate synthetic events from RGB frames. This approach reduces the need for extensive annotation and ensures sufficient data availability. Experimental results confirm that our proposed method can detect balls that are undetectable in RGB frames and that it outperforms existing event-based ball detection methods. Moreover, we conducted tests to verify that our method can detect balls in real events, not just synthetic ones. These results demonstrate that our proposed method opens up new possibilities in sports ball detection.
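To make the idea of transforming sparse events into an image format concrete, the following minimal sketch accumulates events from a fixed time window into a two-channel count image, one channel per polarity. The abstract does not specify which representation the method uses, so this per-polarity histogram is an assumption chosen for illustration only; the names `Event` and `events_to_frame` and the window parameters are hypothetical.

```python
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def events_to_frame(events: Iterable[Event], width: int, height: int,
                    t_start: float, t_end: float) -> List[List[List[int]]]:
    """Count events per pixel and polarity within [t_start, t_end).

    Returns a nested list shaped [2][height][width]: channel 0 holds
    positive-event counts, channel 1 holds negative-event counts.
    This representation is an illustrative assumption, not necessarily
    the one used by the proposed method.
    """
    frame = [[[0] * width for _ in range(height)] for _ in range(2)]
    for ev in events:
        # Skip events outside the accumulation window.
        if not (t_start <= ev.t < t_end):
            continue
        # Skip events that fall outside the sensor area.
        if not (0 <= ev.x < width and 0 <= ev.y < height):
            continue
        channel = 0 if ev.polarity > 0 else 1
        frame[channel][ev.y][ev.x] += 1
    return frame


# Toy example: four events on a 3x2 sensor, accumulated over 10 ms.
events = [
    Event(1, 0, 0.001, +1),
    Event(1, 0, 0.002, +1),
    Event(2, 1, 0.003, -1),
    Event(0, 0, 0.020, +1),  # later than the window, so it is ignored
]
frame = events_to_frame(events, width=3, height=2, t_start=0.0, t_end=0.010)
```

An image built this way has a fixed size regardless of how many events arrive, which is what lets a standard image-based object detector consume it. The window length trades temporal precision against how many events each image contains.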